In about three weeks, Localogy will hold its next conference, Localogy Place: The Real World Metaverse. Departing from the common metaverse connotation of synchronous online 3D worlds, the event is all about how digital content and data are adding depth and dimension to the places and spaces around us.

In that spirit, we’re gearing up for the show by immersing ourselves in the topic (excuse the pun). So for the latest Video Vault post, we’re spotlighting Google’s efforts to build a real-world metaverse. Specifically, its Lens and Live View features add digital layers of content to the world around us.

We’ve examined these tools in the past, but Google has since taken things to the next level. At its recent I/O conference, Google launched the ARCore Geospatial API. This takes the underlying architecture and capabilities of its Live View AR navigation and spins them out so that anyone can build geospatial AR apps.

Check out the session video below, preceded by our summary notes and strategic takeaways. Hope to see you in three weeks in NYC…

What’s Driving Google’s Interest in Visual Search & Navigation?

Environmental Interaction

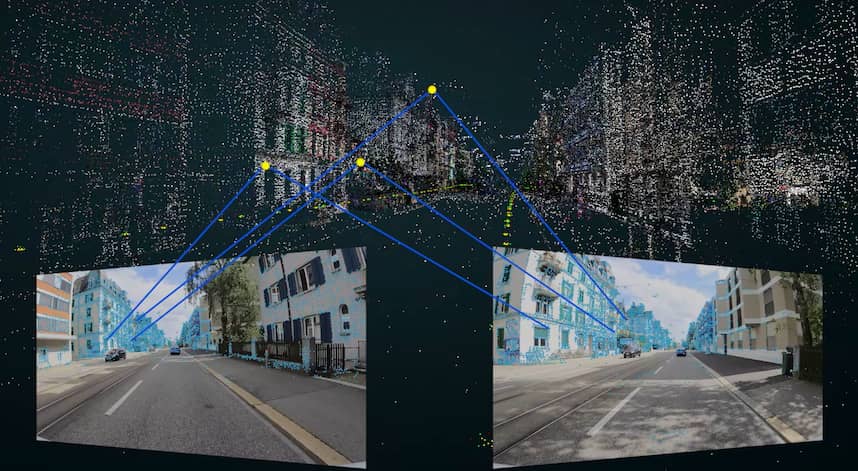

Before going into the details of the ARCore Geospatial API, some background is in order. For AR to work, it needs a three-dimensional map of a given space. Of course, there’s rudimentary AR like early versions of Pokémon Go, but that involves “floating stickers” without environmental interaction.

Similarly, with AR navigation such as Google Live View, AR devices must localize themselves before they can know where to place directional arrows… and not lead people off a cliff. Whether it’s gaming, navigation, or other AR use cases, this environmental understanding is critical.

Google is uniquely positioned here because it has served as the world’s search engine for the past 20+ years. Its knowledge graph contains indexed images, which fuel the AI behind Google Lens. And Street View imagery provides visual positioning and localization for AR navigation.

With that backdrop, Google has taken this capability and spun it out for developers, which brings us back to the Geospatial API. It lets developers build AR apps on top of Google’s geo-local AR framework so they can hit the ground running with the work that Google has already done.

Crowdsourced Creativity

As with Niantic’s work on Lightship (Niantic is a speaker at Localogy Place), the outcome will be scale and crowdsourced creativity. Google can only build so many AR apps. Once the tools are in developers’ hands, they’ll come up with creative use cases, from gaming to restaurant discovery.

Here, Google points to a few use cases that could be prime areas of development for geospatial AR. They include ridesharing and micro-mobility. For example, directional arrows and overlays can tell you where to pick up your scooter, or visually highlight your arriving Uber in a crowded area.
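To make the scooter example a bit more concrete, here is a minimal sketch of the geometry behind a directional arrow. It is plain Python trigonometry and does not call the ARCore Geospatial API. It assumes the device already knows its own latitude, longitude, and compass heading (the localization step described earlier), and the function names and sample coordinates are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters (approximation)


def distance_and_bearing(lat1, lng1, lat2, lng2):
    """Return (distance in meters, compass bearing in degrees) from point 1 to point 2.

    Uses the haversine formula for distance and the standard
    initial-bearing formula; 0 degrees is north, 90 degrees is east.
    """
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lng2 - lng1)

    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    distance = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

    y = math.sin(dlmb) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb)
    bearing = (math.degrees(math.atan2(y, x)) + 360) % 360
    return distance, bearing


def arrow_rotation(device_heading_deg, bearing_deg):
    """Signed angle in degrees, within [-180, 180), to turn the on-screen arrow.

    Positive means the target is to the user's right, negative to the left.
    """
    return (bearing_deg - device_heading_deg + 180) % 360 - 180


if __name__ == "__main__":
    # Hypothetical rider position and scooter pickup point.
    rider = (40.7580, -73.9855)
    scooter = (40.7589, -73.9851)
    dist, bearing = distance_and_bearing(*rider, *scooter)
    turn = arrow_rotation(device_heading_deg=350.0, bearing_deg=bearing)
    print(f"Scooter is {dist:.0f} m away; rotate arrow {turn:+.0f} degrees")
```

A real app would hand this kind of calculation to the AR framework, which also accounts for altitude and camera pose. The point is simply that once the device is localized, placing an arrow reduces to comparing where the user is facing with where the target sits.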

Another use case is what Google calls location-based activations. These involve experiences at a music festival, sporting venue, or airport. The idea is to provide wayfinding and promotions in and around these venues to help customers and attendees deepen their experiences.

Lastly, Google points to gaming and self-expression as areas that the Geospatial API can empower. These include AR scavenger hunts or Pokémon Go-like experiences. It’s also about artwork and generally adding digital depth to real-world waypoints for others to enjoy.

We’ll pause there and cue the full video below, including drill-downs on how the software works. Stay tuned for next week when we’ll dive into Niantic’s parallel efforts…