After initially getting lots of grief for being late to the generative AI party – despite years of research and positioning around AI – Google has sprung back with rapid-fire rollouts. That includes a procession of AI announcements at Google I/O, followed by several more search-enhancing AI moves.

Now it’s back again with a handful of AI integrations (as we predicted). These include new AI-powered travel search features and AI-assisted product search. Another feature lets users see how clothing fits on a diverse range of models in 2D images, similar in some ways to generative AI artwork.

As we’ve examined over the past few months, including in the latest episode of This Week in Local with Yext’s Christian Ward, Google is well-positioned for AI. Though it’s saddled with an innovator’s dilemma, namely the need to avoid cannibalizing its core product, years of knowledge graph indexing prime it for the age of AI.

Ep. 25 of This Week in Local Explores Emerging Tech with Yext’s Christian Ward

Testing the Waters

Taking this week’s AI updates one at a time, Google’s new travel search feature offers a more conversational ChatGPT-like experience. This invites users to make natural-language queries like “What time of year is best to go to Thailand?” or “Is this restaurant good for a kid’s birthday party?”

The latter – an example given by Google itself – broadens the definition of “travel” to include local search. In either case, the results served to users will tap into a variety of Google data silos, including Google Business Profiles (formerly Google My Business) and other relevant sources for travel queries.

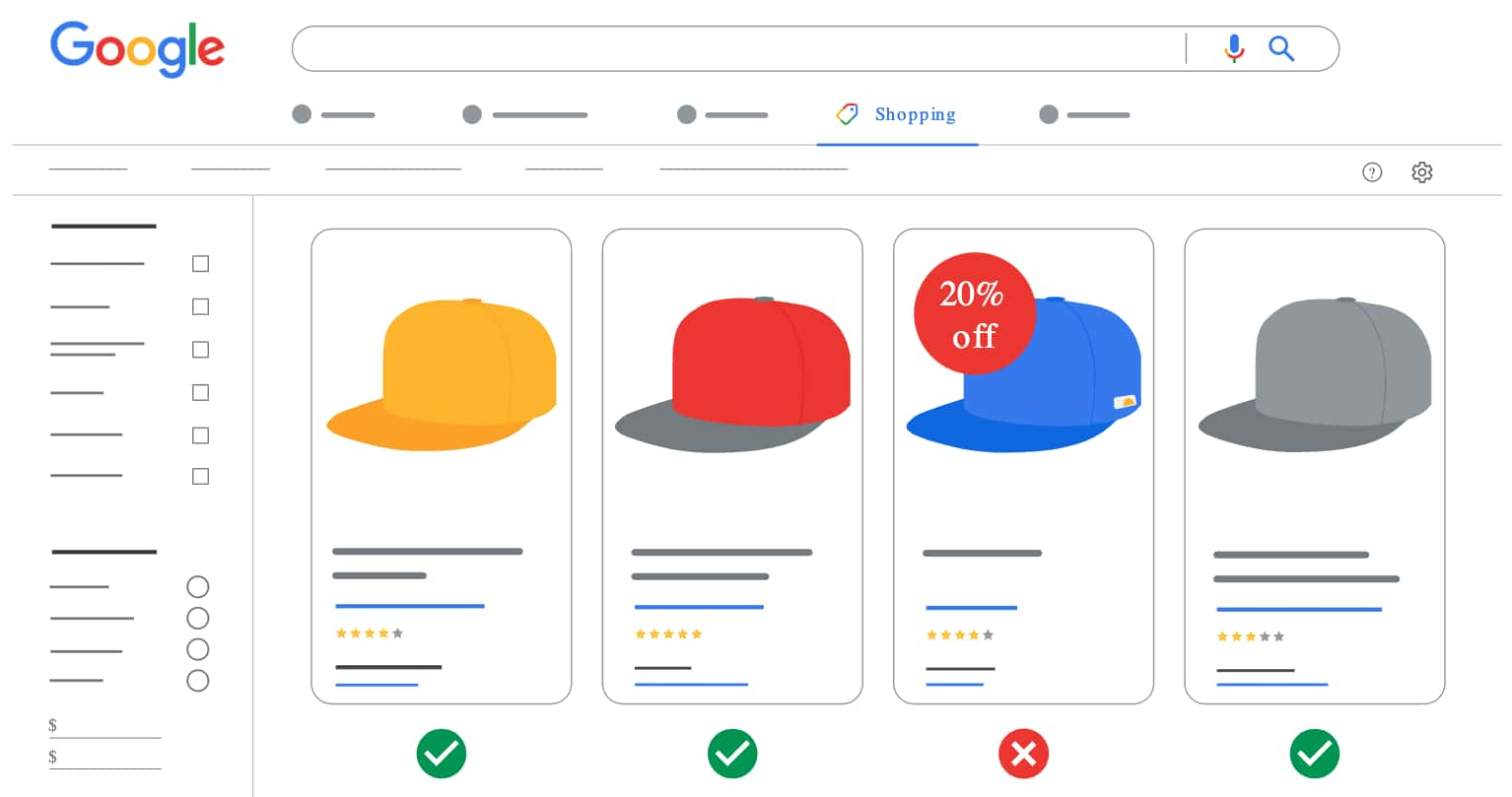

As for the product search updates teased above, they’re very similar to the travel search updates, but focused on product-level info. The same natural language search functionality is offered, pulling content from Google Shopping including descriptions, reviews, prices, images, and recommendations.

Both moves above fall under the umbrella of Google’s new Search Generative Experience (SGE). This is an experimental set of products (very Google) to test the waters of AI-based user experiences. And again, Google has an advantage here, to the tune of 200 million place listings and 35 billion product listings.

Confident Consumers

Lastly, as noted, Google is launching a new shopping feature that will simulate style and fit for fashion items. The goal is to give shoppers more confidence in their eCommerce decisions. As demonstrated by AR try-ons, that confidence translates to higher conversions and fewer returns.

Speaking of AR try-ons, the spirit here is similar, but the execution is different. Rather than overlaying 3D digital representations of products on your own body, simulated fitting is done through more traditional 2D images. This doesn’t make it any less sophisticated, as there’s lots of AI working in the background.

Specifically, Google applies a diffusion-based model to generate realistic images of garments (predicting physics like draping and folding) as worn by models who represent an inclusive range of body types. The feature is available for launch partners like H&M and Everlane, activated by a new “Try On” badge.

This approach makes simulated fitting less intimidating to most consumers than AR try-ons. Google knows that adding emerging tech into people’s lives should occur gradually. Here, it’s integrating AI in small doses, in the 2D formats that shoppers are already comfortable with. Given growing fears of AR generally, comfort is the name of the game.